
DeepSeek-OCR: Why AI Researchers Are Rethinking Text Itself

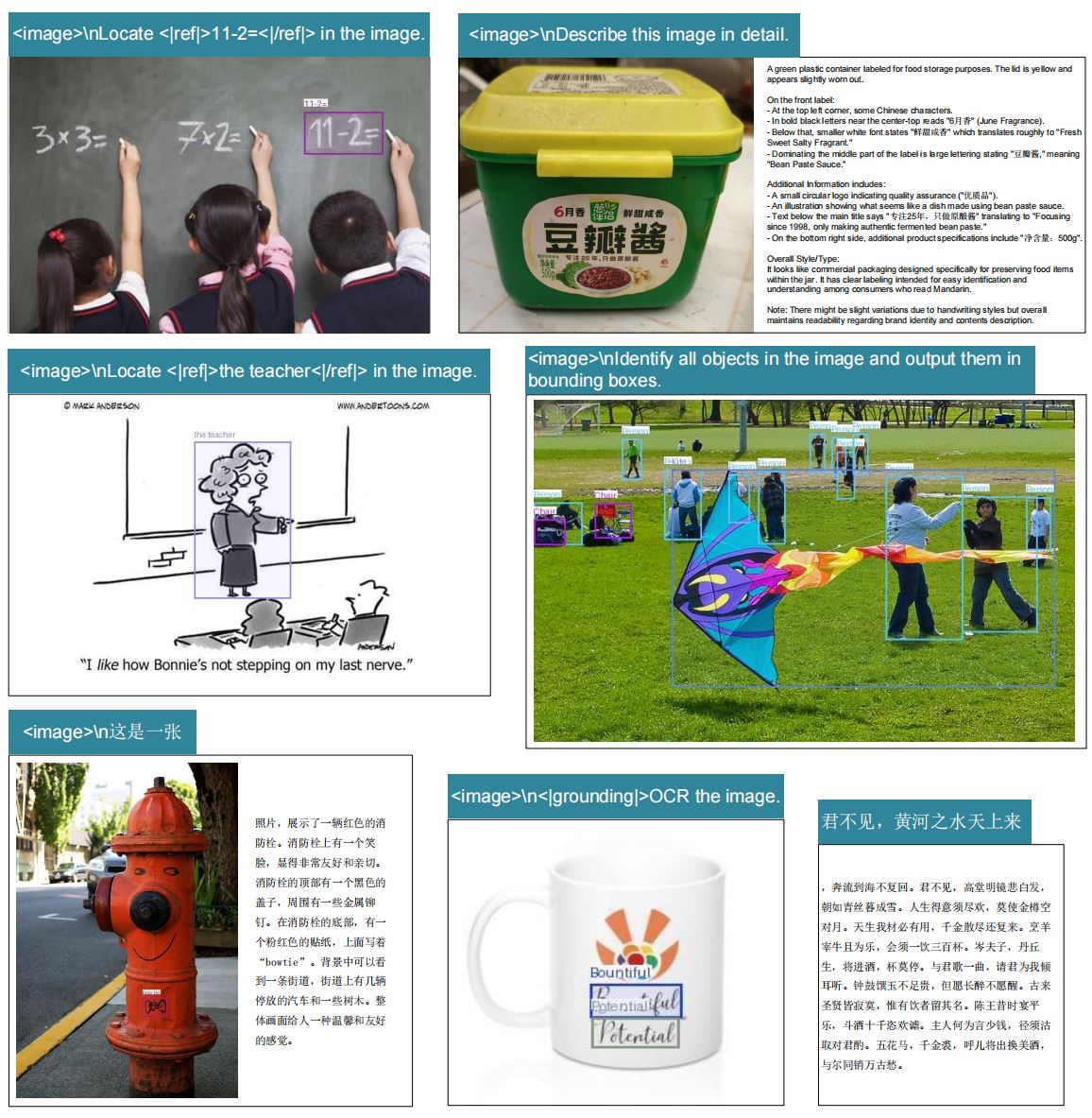

DeepSeek-OCR compressed text roughly 10× while still decoding it back with about 97% precision, by treating images as more efficient information carriers than text tokens. It is a radical approach that could help ease AI’s context length problem, and it has researchers questioning whether language models should process text at all.

On October 20, 2025, DeepSeek released a 3-billion-parameter model that sparked an unexpected debate: should AI systems even use text tokens at all? Within 24 hours, DeepSeek-OCR gained over 4,000 stars on GitHub and caught the attention of Andrej Karpathy, OpenAI co-founder and former Tesla AI director, who called it more interesting than just “another OCR model.”

The fuss isn’t about reading text from images better—it’s about a radical idea that could reshape how large language models work.

What Is OCR and Why Does It Matter?

Optical Character Recognition (OCR) is the technology that converts images of text into digital, editable text. Point your phone at a business card, and the app extracts the contact information—that’s OCR at work.

Traditional OCR has been around for decades, powering everything from:

- Document digitization: Converting paper archives into searchable databases

- Receipt scanning: Expense tracking apps reading purchase information

- License plate recognition: Traffic cameras identifying vehicles

- PDF text extraction: Making scanned documents searchable and copyable

- Accessibility tools: Screen readers for visually impaired users

- Form processing: Automating data entry from paper forms

Modern OCR isn’t just about recognizing letters. Advanced systems need to understand:

- Complex layouts (multi-column text, tables, headers)

- Mathematical formulas and scientific notation

- Charts and diagrams

- Handwritten text

- Multiple languages simultaneously

- Document structure and hierarchy

This matters enormously for AI training. Large language models learn from vast text corpora—often extracted from PDFs, scanned books, and documents. Better OCR means better training data, which means smarter AI.

But DeepSeek-OCR isn’t trying to solve OCR. It’s using OCR to solve something much bigger: the context length problem that’s been plaguing AI for years.

The Context Problem: Why LLMs Choke on Length

Large language models have a notorious weakness: they struggle with long documents. The problem is mathematical. In a transformer, self-attention scores every token against every other token, so its computation grows quadratically with sequence length. Double the text length, and you roughly quadruple the attention cost.
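To make the quadratic scaling concrete, here is a minimal Python sketch that counts the query-key scores full self-attention computes for n tokens, and the memory a naive, fully materialized score matrix would need. The 2-byte (fp16) element size and the single head and single layer are simplifying assumptions; optimized kernels such as FlashAttention avoid storing the whole matrix, but the compute still grows as n².

```python
def attention_pairs(n_tokens: int) -> int:
    """Number of query-key scores full self-attention computes: n * n."""
    return n_tokens * n_tokens


def naive_score_matrix_bytes(n_tokens: int, bytes_per_elem: int = 2) -> int:
    """Memory for one fully materialized n x n score matrix (one head, one layer).

    Assumes fp16 scores (2 bytes each); real kernels often avoid materializing this.
    """
    return attention_pairs(n_tokens) * bytes_per_elem


if __name__ == "__main__":
    for n in (1_000, 2_000, 150_000):
        gib = naive_score_matrix_bytes(n) / 2**30
        print(f"{n:>7} tokens: {attention_pairs(n):>15,} pairs, {gib:8.2f} GiB per head per layer")
    # Doubling the sequence length quadruples the number of attention scores.
    print(attention_pairs(2_000) // attention_pairs(1_000))  # 4
```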

The token bottleneck: When you feed text to an LLM, it first converts words into “tokens”—numerical representations that the model processes. A 100,000-word document might consume 150,000+ tokens. Processing that many tokens requires:

- Massive memory (gigabytes per request)

- Expensive computation (every token attends to every other token)

- Slower response times (latency increases dramatically)

- Higher operational costs (more GPUs, more electricity)

Current solutions are band-aids:

- Truncation: Just cut off the document (lose information)

- Chunking: Split into pieces and process separately (lose coherence)

- Retrieval: Extract only relevant sections (miss context)

- Compression techniques: Summarize aggressively (lose nuance)

What if there’s a completely different approach? What if text doesn’t need to be text at all?

The Insight: Images Are More Efficient Than Text

Here’s the counterintuitive realization at the heart of DeepSeek-OCR: an image of text can be represented with far fewer tokens than the text itself.

Consider a page with 1,000 words. Traditional processing:

- Tokenize the text: ~1,500 text tokens

- Process through transformer: quadratic attention over 1,500 tokens

- Memory and compute grow quadratically with token count

DeepSeek’s approach:

- Convert the page into an image

- Compress it into ~100-150 “vision tokens”

- Process through transformer: quadratic attention over just 150 tokens

- Roughly 10× compression with about 97% decoding precision
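A short worked version of this comparison, using the example’s own numbers (1,500 text tokens versus 150 vision tokens). It also makes explicit what the bullets imply: because attention is quadratic, a 10× cut in tokens means roughly 100× fewer attention scores. This sketch ignores the cost of running the vision encoder itself, an extra step the text-only path does not pay.

```python
# Numbers from the page example; the vision count uses the upper end of ~100-150.
text_tokens = 1_500     # ~1,000-word page tokenized as text
vision_tokens = 150     # the same page encoded as vision tokens

compression = text_tokens / vision_tokens
# Full self-attention scores n * n query-key pairs, so the saving is squared.
attention_savings = (text_tokens ** 2) / (vision_tokens ** 2)

print(f"token reduction: {compression:.0f}x")                # token reduction: 10x
print(f"attention-pair reduction: {attention_savings:.0f}x")  # attention-pair reduction: 100x
```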

This works because images encode information densely. A photograph of a page captures:

- Every character (including rare symbols)

- Layout and spacing (which conveys meaning)

- Visual structure (headers, footnotes, emphasis)

- Font and styling (which humans use for comprehension)

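Language is redundant by nature. Written text includes tons of predictable patterns: articles, conjunctions, common phrases. A vision encoder can, in principle, learn to squeeze much of that redundancy out. Another plausible factor is that each vision token is a continuous embedding vector rather than a single entry from a fixed vocabulary, so one vision token can carry far more information than one text token.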

The DeepSeek-OCR Paper: Technical Deep Dive

DeepSeek’s research paper, “DeepSeek-OCR: Contexts Optical Compression,” published on arXiv on October 21, 2025, provides the technical foundation for their claims. Let’s break down what makes this work significant.

Architecture: Two-Stage Design

DeepSeek-OCR consists of two components: DeepEncoder (the vision encoder) and DeepSeek3B-MoE-A570M (the decoder).

DeepEncoder (~380M parameters): The encoder combines an 80M SAM-base model for local perception using window attention with a 300M CLIP-large model for global understanding using dense attention, connected by a 16× convolutional compressor.

This is clever engineering. The SAM component handles fine-grained details (individual characters, small features) using window attention, which is computationally cheap because it only looks at local neighborhoods. The CLIP component captures broader context (document layout, overall structure) using global attention.

Between them sits a convolutional compressor that performs 16× downsampling. Here’s the workflow:

- Input a 1024×1024 image

- SAM divides it into 4,096 patch tokens

- Compressor reduces these to just 256 vision tokens

- CLIP processes the compressed representation

This architecture maintains low memory usage even with high-resolution inputs—a critical requirement for practical deployment.
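The token bookkeeping in this workflow can be checked with a few lines of Python. This is a minimal sketch, assuming 16×16-pixel patches and a plain 16× reduction in token count by the compressor; under those assumptions it reproduces the 1024×1024 → 4,096 → 256 numbers above, and the same formula also yields the 64/100/256/400 token counts of the paper’s Tiny, Small, Base, and Large modes.

```python
def deepencoder_tokens(side_px: int, patch_px: int = 16, compression: int = 16) -> tuple[int, int]:
    """Return (patch_tokens, vision_tokens) for a square side_px x side_px image.

    Assumes 16x16-pixel patches and a 16x token reduction by the convolutional
    compressor, which matches the paper's 1024x1024 -> 4,096 -> 256 example.
    """
    if side_px % patch_px:
        raise ValueError("image side must be a multiple of the patch size")
    per_side = side_px // patch_px
    patch_tokens = per_side * per_side           # tokens SAM's window attention sees
    vision_tokens = patch_tokens // compression  # tokens handed on to CLIP and the decoder
    return patch_tokens, vision_tokens


for side in (512, 640, 1024, 1280):
    patches, vision = deepencoder_tokens(side)
    print(f"{side}x{side}: {patches:>5} patch tokens -> {vision:>3} vision tokens")
```

Note where the expensive part happens: only SAM, with cheap window attention, sees the full 4,096 tokens, while CLIP’s dense attention runs on the 256 compressed tokens.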

DeepSeek3B-MoE Decoder (~3B parameters, ~570M active): The decoder is a Mixture-of-Experts language model that activates 6 of its 64 routed experts (plus 2 shared experts) for each token. This gives the model the capacity of a 3-billion-parameter system while only about 570 million parameters are active during inference, so it runs at roughly the cost of a much smaller dense model.
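The core mechanism of sparse activation is top-k routing: a small router scores every expert for the current token, and only the k best-scoring experts actually run. Below is a minimal, generic pure-Python sketch with k=6 of 64 experts. It is not DeepSeek’s exact gating function, it omits the always-on shared experts, and the toy “experts” are hypothetical stand-ins for real MLP blocks.

```python
import math
import random


def top_k_route(router_logits: list[float], k: int = 6) -> list[tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalize their scores.

    Returns (expert_index, gate_weight) pairs; the weights sum to 1.
    """
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in top)  # subtract max for numerical stability
    exps = {i: math.exp(router_logits[i] - m) for i in top}
    total = sum(exps.values())
    return [(i, exps[i] / total) for i in top]


def moe_layer(x: list[float], experts, router_logits: list[float], k: int = 6) -> list[float]:
    """Weighted sum of the k selected experts' outputs; the other experts never run."""
    out = [0.0] * len(x)
    for idx, weight in top_k_route(router_logits, k):
        y = experts[idx](x)
        out = [o + weight * v for o, v in zip(out, y)]
    return out


if __name__ == "__main__":
    random.seed(0)
    num_experts, dim = 64, 4
    # Hypothetical toy experts: each scales its input by its own constant.
    experts = [lambda x, s=s: [s * v for v in x] for s in range(num_experts)]
    logits = [random.gauss(0, 1) for _ in range(num_experts)]  # stand-in router output for one token
    routes = top_k_route(logits, k=6)
    print("active experts:", [i for i, _ in routes])
    print("output:", moe_layer([1.0] * dim, experts, logits))
```

Only 6 of the 64 expert functions are called per token, which is why the active parameter count, not the total, sets the inference cost.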

The Compression Results: Numbers That Matter

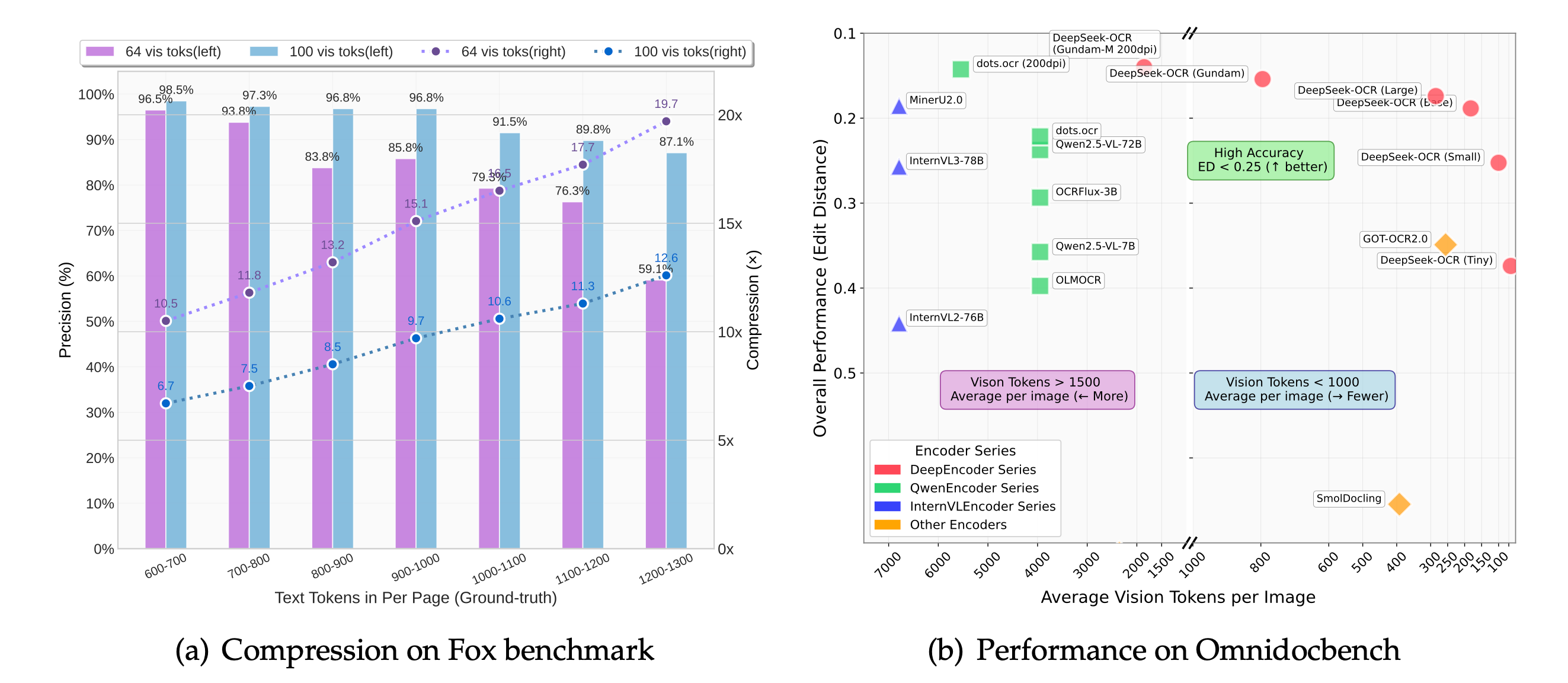

The paper’s core finding revolves around compression ratios and accuracy:

When the number of text tokens is within 10 times that of vision tokens (compression ratio < 10×), the model achieves 97% decoding precision. Even at 20× compression, OCR accuracy remains around 60%.

Let’s unpack what this means:

10× compression with 97% accuracy:

- Original document: 1,000 text tokens

- DeepSeek representation: ~100 vision tokens

- Text recovered with about 97% decoding precision

- Close to lossless for many practical purposes, though not perfect

20× compression with 60% accuracy:

- Original document: 2,000 text tokens

- DeepSeek representation: ~100 vision tokens

- Still captures majority of content

- Potentially useful for older context or summaries

The researchers tested on the Fox benchmark, using documents containing 600-1,300 text tokens. With just 100 vision tokens (640×640 resolution), they achieved 96.8% precision on documents with 900-1,000 text tokens, a compression ratio of approximately 9.7×.
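The compression ratio used throughout the paper is simply text tokens divided by vision tokens. Here is a quick Python check of the Fox bucket quoted above. The 64-token line is an illustrative what-if using Tiny mode’s budget, not a result reported here.

```python
def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """Text tokens represented per vision token (the paper's compression ratio)."""
    return text_tokens / vision_tokens


# Bucket from the Fox result: 900-1,000 text tokens on 100 vision tokens.
low, high = compression_ratio(900, 100), compression_ratio(1_000, 100)
print(f"900-1,000 text tokens on 100 vision tokens: {low:.1f}x to {high:.1f}x")

# The reported ~9.7x average for that bucket falls inside this range,
# just under the ~10x threshold where precision stays around 97%.
assert low <= 9.7 <= high

# What-if: the same pages squeezed into 64 vision tokens push the ratio past 14x.
print(f"on 64 vision tokens: {compression_ratio(900, 64):.1f}x to {compression_ratio(1_000, 64):.1f}x")
```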

Multi-Resolution Modes: Flexibility in Practice

One of the paper’s practical innovations is supporting multiple resolution modes for different use cases:

DeepEncoder supports several resolution modes: Tiny (512×512, 64 tokens), Small (640×640, 100 tokens), Base (1024×1024, 256 tokens), Large (1280×1280, 400 tokens), and a dynamic Gundam mode that combines several 640×640 tiles with a 1024×1024 global view.

This flexibility allows users to trade off between:

- Speed: Lower resolution = fewer tokens = faster processing

- Accuracy: Higher resolution = more tokens = better OCR precision

- Memory: Fewer tokens = less GPU memory required

For a simple document, Tiny mode with 64 tokens might suffice. For a complex academic paper with equations and diagrams, Large or Gundam mode provides the necessary detail.
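One way to act on this trade-off is to pick the cheapest mode that keeps the compression ratio in the paper’s high-precision zone (about 10× or less). The sketch below is a hypothetical heuristic, not part of DeepSeek-OCR’s API; it assumes you already have a rough estimate of how many text tokens a page holds, and it leaves out Gundam mode because that mode’s token count varies with tiling.

```python
# Token budgets per fixed mode, as listed in the paper (Gundam omitted: its count varies).
MODES = [("Tiny", 512, 64), ("Small", 640, 100), ("Base", 1024, 256), ("Large", 1280, 400)]


def pick_mode(estimated_text_tokens: int, max_ratio: float = 10.0) -> str:
    """Hypothetical heuristic: smallest mode whose compression ratio stays <= max_ratio.

    Falls back to the largest fixed mode if even Large would exceed the ratio
    (that is where a tiled mode such as Gundam would come in).
    """
    for name, _side, vision_tokens in MODES:
        if estimated_text_tokens / vision_tokens <= max_ratio:
            return name
    return MODES[-1][0]


for tokens in (500, 950, 2_400, 6_000):
    print(tokens, "->", pick_mode(tokens))
```

Lowering `max_ratio` trades speed for precision; raising it toward 20× accepts the steep accuracy drop the paper reports at that level.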

Training Data: Scale and Diversity

The model was trained on 30 million PDF pages covering approximately 100 languages, with Chinese and English accounting for 25 million pages. Training data includes nine document types—academic papers, financial reports, textbooks, newspapers, handwritten notes, and others.

But they didn’t stop at traditional OCR:

The training incorporated “OCR 2.0” data: 10 million synthetic charts, 5 million chemical formulas, and 1 million geometric figures, plus 20% general vision data and 10% text-only data to maintain language capabilities.

This diverse training enables DeepSeek-OCR to handle not just text, but:

- Scientific notation and equations

- Chemical structural formulas

- Charts (line, bar, pie, composite)

- Geometric diagrams

- Natural images

- Mixed-content documents

Benchmarks: State-of-the-Art Performance

The paper demonstrates competitive or superior performance on standard benchmarks:

OmniDocBench results: DeepSeek-OCR surpasses GOT-OCR2.0 (which uses 256 tokens per page) using only 100 vision tokens, and outperforms MinerU2.0 (averaging 6,000+ tokens per page) while utilizing fewer than 800 vision tokens.

This isn’t just incremental improvement: it achieves better results with roughly 2.5× fewer tokens than GOT-OCR2.0 and more than 7× fewer than MinerU2.0. That translates directly to:

- Faster inference (less computation per page)

- Lower memory requirements (more concurrent requests)

- Reduced costs (fewer GPUs needed)

- Higher throughput (more pages processed per second)

Production capability: In production, DeepSeek-OCR can generate training data for LLMs/VLMs at a scale of 200,000+ pages per day on a single A100-40G GPU.

For context, generating high-quality training data is a massive bottleneck in AI development. Being able to process 200,000 pages daily on one GPU (which rents for a few dollars per hour) is economically transformative for dataset creation.
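To put the single-GPU figure in per-page terms, here is a back-of-the-envelope Python sketch. The $2.00/hour rental price is a hypothetical assumption for illustration, not a quoted price, and the calculation assumes the GPU runs flat out for the whole day.

```python
SECONDS_PER_DAY = 24 * 60 * 60

pages_per_day = 200_000     # per A100-40G, the figure DeepSeek reports
gpu_cost_per_hour = 2.00    # ASSUMPTION: hypothetical cloud rental price in USD

pages_per_second = pages_per_day / SECONDS_PER_DAY
cost_per_page = gpu_cost_per_hour * 24 / pages_per_day

print(f"{pages_per_second:.2f} pages/s")                         # a bit over 2 pages every second
print(f"${cost_per_page:.5f} per page")                          # a small fraction of a cent
print(f"${cost_per_page * 1_000_000:,.0f} per million pages")
```

Swap in your own hourly price; the cost per page scales linearly with it.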

The Memory Decay Hypothesis

Perhaps the paper’s most speculative but intriguing idea is applying this to context management:

The researchers suggest that for older contexts in multi-turn conversations, they could progressively downsize the rendered images to reduce token consumption, mirroring how human memory decays over time and visual perception degrades over spatial distance.

Imagine an AI conversation where:

- Current turn: Full resolution (1024×1024, 256 tokens)

- 2 turns ago: Medium resolution (640×640, 100 tokens)

- 5 turns ago: Low resolution (512×512, 64 tokens)

- 10 turns ago: Thumbnail (256×256, 16 tokens)

Recent context stays sharp, but older exchanges naturally “fade” in detail while still contributing to coherence. This mimics biological memory—you remember recent conversations in detail but older ones become fuzzy summaries.
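The schedule above can be turned into a token budget. This is a minimal sketch of that hypothetical scheme: the age thresholds mirror the example tiers, and the token formula assumes 16-px patches with a 16× token compression, which gives 256, 100, 64, and 16 tokens for the four resolutions.

```python
def vision_tokens(side_px: int) -> int:
    # Assumes 16-px patches and 16x token compression: (side/16)^2 / 16.
    return (side_px // 16) ** 2 // 16


def resolution_for_age(turns_ago: int) -> int:
    """Hypothetical decay schedule matching the example tiers: older turns get smaller renders."""
    if turns_ago < 2:
        return 1024
    if turns_ago < 5:
        return 640
    if turns_ago < 10:
        return 512
    return 256


def history_budget(num_turns: int) -> int:
    """Total vision tokens for a conversation whose newest turn has age 0."""
    return sum(vision_tokens(resolution_for_age(age)) for age in range(num_turns))


full = 30 * vision_tokens(1024)
print(f"30 turns, all at full resolution: {full} tokens")
print(f"30 turns with decay: {history_budget(30)} tokens")
```

Even with decay, the budget still grows by 16 tokens for every old turn, so a real system would eventually need to merge or drop the oldest renders.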

The potential: much longer effective context windows, because older history is compressed optically rather than thrown away.

Why This Matters: Implications Beyond OCR

The immediate reaction from the AI community wasn’t about OCR performance—it was about what this means for how we build AI systems.

Andrej Karpathy’s Perspective

Karpathy emphasized that DeepSeek-OCR points toward getting rid of traditional tokenizers, which he described as “ugly and standalone,” importing Unicode and byte-encoding baggage and adding security risks. He suggested that it might make more sense for all LLM inputs to be images: even plain text could be rendered into an image first.

This is a radical statement from an OpenAI co-founder. He’s suggesting text-to-text processing might be the wrong paradigm. Instead:

- Take any text input

- Render it as an image

- Process through vision encoder

- Generate output
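The first step, rendering text as an image, is easy to prototype. This is a minimal sketch using the Pillow imaging library; the canvas size, wrap width, and 14-px line height are assumptions chosen for illustration and are not taken from DeepSeek-OCR. The resulting image would go to a vision encoder in place of a tokenizer.

```python
import textwrap

from PIL import Image, ImageDraw, ImageFont


def render_text(text: str, side_px: int = 1024, chars_per_line: int = 100) -> Image.Image:
    """Render plain text onto a white square grayscale canvas, top-left aligned.

    A crude stand-in for a real renderer: fixed wrap width, Pillow's default font.
    """
    img = Image.new("L", (side_px, side_px), color=255)  # white background
    draw = ImageDraw.Draw(img)
    font = ImageFont.load_default()
    y = 8
    for line in textwrap.wrap(text, width=chars_per_line):
        draw.text((8, y), line, fill=0, font=font)  # black text
        y += 14                                     # assumed line height in px
        if y > side_px - 14:
            break                                   # out of room: truncate
    return img


page = render_text("Even when the input is plain text, render it first. " * 20)
print(page.size, page.mode, page.getextrema())  # (min, max) pixel values of the grayscale page
```

A square 1024×1024 canvas was chosen so the page lines up with a 1024×1024 encoder input; text that overflows is simply cut off here, whereas a real pipeline would paginate.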

Why would this be better?

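Information density: Images can pack more text into fewer tokens, which means shorter context windows and more efficiency. They also carry a more general information stream, including formatting such as bold or colored text alongside arbitrary images.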

Tokenizer problems eliminated: Current tokenizers are brittle. They struggle with:

- Rare languages and scripts

- Special characters and emojis

- Code with unusual syntax

- Byte-level encoding ambiguities

- Security vulnerabilities (e.g., jailbreaks that exploit unusual byte sequences or rare tokens)
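A small, standard-library-only illustration of the Unicode and byte-encoding issues in this list. It does not run a real tokenizer; it only shows that strings which look identical on screen can reach a byte-level tokenizer as different byte sequences, a special case that a rendered image of the text would not have.

```python
import unicodedata

composed = "caf\u00e9"                               # 'é' as one code point (U+00E9)
decomposed = unicodedata.normalize("NFD", composed)  # 'e' + combining acute accent (U+0301)

# Visually identical strings...
print(composed, decomposed)
# ...but different code points and different UTF-8 bytes,
# so a byte-level tokenizer sees two different inputs.
print(len(composed), len(decomposed))                # 4 5
print(composed.encode("utf-8"), decomposed.encode("utf-8"))
print(composed == decomposed)                        # False

# A single emoji is 4 UTF-8 bytes; byte-level BPE can split it into pieces
# that are not valid characters on their own.
emoji = "\N{GRINNING FACE}"
print(len(emoji.encode("utf-8")))                    # 4
```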

In principle, vision-based processing sidesteps all of these. Once text is rendered, the model sees only pixels: no tokenizer special cases, no encoding ambiguities, no token-level exploits.

Competing with Gemini’s Context Windows

Some researchers speculate that Google may have already implemented similar optical compression techniques, potentially explaining why Gemini offers a 1-million-token context window with plans to expand to 2 million, far exceeding competitors.

If Gemini is using visual compression internally, it could help explain its large context advantage:

- GPT-4 Turbo: 128,000 tokens

- Claude Sonnet 4: 200,000 tokens (1M in beta)

- Gemini 2.5 Pro: 1,000,000 tokens (expanding to 2M)

Google hasn’t disclosed its methods, so this remains speculation. Still, the arithmetic is suggestive: at 10× compression, a 1-million-token visual context would represent roughly 10 million text tokens of effective memory, far beyond what traditional transformers can handle.

The Open Questions

Researchers acknowledge important unknowns:

It’s unclear how visual token compression interacts with downstream cognitive functioning of an LLM. Can the model reason as intelligently over compressed visual tokens as it can using regular text tokens? Does it make the model less articulate by forcing it into a more vision-oriented modality?

These are critical questions. DeepSeek’s paper focuses on compression-decompression (can you recover the text?), but doesn’t deeply explore:

- Reasoning performance: Does the model understand and reason over visual context as well as text?

- Generalization: Do skills learned via text transfer to visual inputs and vice versa?

- Efficiency trade-offs: Is compressing to vision tokens, then decompressing for reasoning, actually faster than just processing text?

- Quality degradation: At what compression ratio does reasoning quality drop significantly?

The paper provides initial evidence that it works for OCR tasks. Whether it works equally well for complex reasoning, multi-step logic, or creative generation remains to be proven.

Practical Impact: What Changes Now?

For AI Development

Training data pipelines: Companies training large models can now:

- Represent PDF pages with up to ~10× fewer tokens while keeping about 97% decoding precision

- Extract text from images more reliably

- Handle documents across roughly 100 languages

- Include visual structure in training data

At scale, using 20 nodes with 8 A100 GPUs each, DeepSeek-OCR can produce 33 million pages of training data per day—game-changing for dataset creation.
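A quick consistency check between this cluster figure and the single-GPU figure, as a minimal Python sketch. It assumes throughput scales perfectly linearly across GPUs, which is an idealization.

```python
nodes, gpus_per_node = 20, 8
pages_per_gpu_per_day = 200_000  # the single-A100 figure ("200,000+")

cluster_gpus = nodes * gpus_per_node
estimate = cluster_gpus * pages_per_gpu_per_day

print(cluster_gpus, "GPUs")                                               # 160 GPUs
print(f"{estimate:,} pages/day")                                          # 32,000,000 pages/day
# The reported ~33 million pages/day implies slightly more than 200,000 per GPU:
print(f"{33_000_000 / cluster_gpus:,.0f} pages per GPU per day implied")  # 206,250
```

The two numbers agree: 33 million pages a day across 160 GPUs works out to just over 200,000 pages per GPU, matching the “200,000+” single-GPU claim.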

Context windows: If optical compression proves effective for reasoning tasks, we might see:

- LLMs with multi-million-token context windows

- Long-document understanding without chunking

- Full-book comprehension in a single pass

- Entire codebase analysis at once

Architecture changes: Future models might integrate vision encoders by default, treating all inputs as images rather than pure text. This could unify text, image, and multimodal processing into a single pipeline.

For End Users

Better document AI: Applications that process documents—legal tech, finance, healthcare, education—will benefit from:

- More accurate text extraction

- Better handling of complex layouts

- Reliable formula and table parsing

- Faster processing times

Longer conversations: Chat interfaces could maintain vastly longer conversation histories without forgetting earlier context or slowing down.

Multilingual accessibility: 100-language support means better tools for global communication, translation, and information access.

Why Open Source Matters

DeepSeek-OCR is fully open source: the 3-billion-parameter model is available on GitHub and Hugging Face. It was downloaded over 730,000 times in its first month and is officially supported in vLLM for production deployment.

This openness accelerates research and deployment:

- Researchers can experiment with variations

- Companies can integrate it into products

- The community can identify limitations and improvements

- Alternative approaches can be benchmarked against it

Compare this to proprietary models where the techniques remain secret. DeepSeek’s transparency lets everyone build on their work.

The Bigger Picture: Rethinking Modalities

DeepSeek-OCR represents a broader trend: the convergence of vision and language models.

Traditional AI separated:

- NLP models (text-only)

- Computer vision models (images-only)

- Multimodal models (combining both)

The future might not maintain these distinctions. If text-as-image is more efficient, why separate them? A unified architecture that processes all information visually could be simpler, more general, and more powerful.

This aligns with how humans work. We don’t process text and images through completely separate cognitive systems; they’re integrated. Reading activates the visual cortex. Understanding diagrams involves linguistic reasoning. Vision and language are deeply intertwined.

AI systems are starting to mirror this integration. DeepSeek-OCR is one step in that direction.

Conclusion: A New Direction, Not Just a Tool

DeepSeek-OCR went viral not because it’s the best OCR system (though it’s very good), but because it challenges fundamental assumptions about how AI should work.

The traditional view:

- Text is the native format for language models

- Vision is a separate capability bolted on

- Longer context requires bigger models or clever compression

DeepSeek’s proposal:

- Vision might be the native format

- Text is just one type of visual pattern

- Longer context requires rethinking representation

Whether this specific approach becomes standard remains to be seen. The compression-decompression paradigm has limitations, and reasoning over visual tokens needs more validation.

But the core insight, that images can represent text in far fewer tokens than text tokenization does, is profound. It suggests we might be building language models wrong, optimizing for text-native processing when vision-native might be fundamentally better.

As Karpathy noted, this isn’t just about OCR. It’s about reconsidering what information flow in AI systems should look like. And that’s why the repository collected more than 4,000 GitHub stars almost overnight.

DeepSeek-OCR is available at github.com/deepseek-ai/DeepSeek-OCR. The technical paper “DeepSeek-OCR: Contexts Optical Compression” is on arXiv at 2510.18234.
